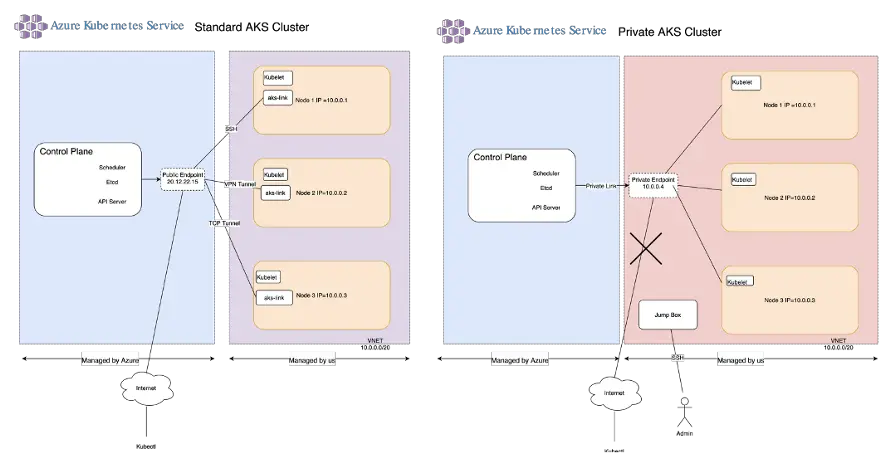

A Standard Azure Kubernetes Service cluster has a public IP address for the API Server and a Private AKS cluster has a private IP address for its API server endpoint. In both the Standard AKS Cluster and the Private AKS cluster the Worker Nodes have a Private IP address.

For those looking for the long answer.

Let’s start by drawing the components of an Azure Kubernetes Service cluster, which are the same as for any Kubernetes cluster, whether it runs on Azure, Amazon, or Google.

Let’s start with a cluster with three nodes.

So far nothing special right?

We have a Scheduler, which schedules workloads onto the different nodes. There is also the API Server, which is used to administer Kubernetes, and then we have etcd.

etcd is a key-value store that Kubernetes uses to store its configuration and cluster state. All of these components live inside the Control Plane.

And then we have the Worker Nodes.

In a Kubernetes cluster, each worker node runs a Kubelet.

A Kubelet is a component that receives instructions from the API server.

It facilitates communication between the API server and the worker nodes.

In Azure, all the worker nodes are inside a VNET.

So let’s draw that okay?

So now we have a VNET here.

Let’s pick an arbitrary IP address range for the VNET. I’m going to pretend that I’ve configured my VNET with the range 10.0.0.0/20. A /20 gives us 4,096 IP addresses.
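The address arithmetic can be checked with Python’s standard `ipaddress` module. This is a small sketch that uses the hypothetical 10.0.0.0/20 range from the example, and the node addresses are illustrative only. Azure reserves five addresses in every subnet (the first four and the last one). So if the whole range were a single subnet, the first node would typically get 10.0.0.4.

```python
import ipaddress

# Hypothetical VNET range from the example above
vnet = ipaddress.ip_network("10.0.0.0/20")

# Azure reserves 5 addresses per subnet: .0 (network), .1 (default gateway),
# .2 and .3 (Azure DNS mapping), and the last address (broadcast)
AZURE_RESERVED_PER_SUBNET = 5

total = vnet.num_addresses                   # 2 ** (32 - 20) addresses
usable = total - AZURE_RESERVED_PER_SUBNET   # assuming the whole range is one subnet

# Illustrative node IPs: the first three addresses after the reserved .0-.3
node_ips = [vnet.network_address + offset for offset in range(4, 7)]

print(total, usable)
print([str(ip) for ip in node_ips])
```

This prints `4096 4091` followed by the three node addresses 10.0.0.4, 10.0.0.5 and 10.0.0.6.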

Now I’m going to assign an IP address to each node.

Now we have three IP addresses assigned. You’ll notice that the Control Plane is not inside the VNET.

This is because, in Azure, we don’t manage the Control Plane. The Control Plane is managed by Azure and runs in its own virtual network.

The question is, how does the Control Plane connect to these nodes?

In a Standard AKS cluster, there is a Public IP address for the Control Plane.

I’m also going to create a random public IP address for the public API endpoint as an example.

So it’s a public endpoint. It’s important to note that these nodes here are regular VMs.

But these VMs do not have a public IP address. They only have an IP address within the VNET.

This means that we can’t reach these VMs directly from outside the VNET.

So there is no way for the AKS Control Plane to connect directly to the Kubelet on each worker node. The Kubelet runs as a service on each worker node (not as a pod), and since the nodes only have private IP addresses, the Kubelets are not reachable from outside the VNET, at least not without a load balancer or some other trick. That trick is exactly what we are going to talk about.
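A quick way to see the difference between the two kinds of address is the `is_private` property of Python’s `ipaddress` module. The public API endpoint address used here (20.50.10.5) is purely hypothetical and comes from a range that is not reserved for private use. The node addresses reuse the illustrative 10.0.0.x values.

```python
import ipaddress

vnet = ipaddress.ip_network("10.0.0.0/20")        # hypothetical VNET
node_ips = ["10.0.0.4", "10.0.0.5", "10.0.0.6"]   # illustrative node IPs
api_endpoint_ip = "20.50.10.5"                    # hypothetical public API endpoint

def describe(ip_str: str) -> str:
    """Report whether an address is private and whether it lies inside the VNET."""
    ip = ipaddress.ip_address(ip_str)
    scope = "private" if ip.is_private else "public"
    location = "inside the VNET" if ip in vnet else "outside the VNET"
    return f"{ip}: {scope}, {location}"

for ip in node_ips + [api_endpoint_ip]:
    print(describe(ip))
```

Private addresses such as 10.0.0.4 are not routed on the public internet. That is why the control plane cannot simply dial into the nodes, while anyone can try to reach the public endpoint.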

The Worker Nodes are managed by the Customer. And the Control plane is managed by Azure.

A Standard AKS Cluster is by default more secure than a standard cluster in Google GKE.

Let me justify what I just said.

In GCP for example, when you create a Standard Kubernetes cluster, each Worker Node has a public IP address assigned, in addition to a private IP address.

So, from a security point of view, a Standard Azure AKS cluster is by default more secure than a GCP GKE cluster with default settings.

Going back to our original problem.

How does Azure enable the connection between the control plane and the worker nodes?

Here we have a control plane exposed through a public endpoint, which kubectl uses to send commands to the cluster.

How can we connect the Control plane to the Worker Nodes so that we can control them?

In Azure Kubernetes Service, you might see one of three different types of pods running on each Worker Node, which are used to connect the Azure Control Plane to the Worker Nodes.

To connect the control plane to the worker nodes we need to use tunnels and these tunnels need to be initiated by the Worker Nodes.
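The key idea is a connection that is opened by the node but then used by the control plane to send commands. It can be illustrated with plain TCP sockets on localhost. This is a simplified sketch, not how tunnelfront, aks-link or Konnectivity are implemented: there is no SSH, VPN or TLS here, and the `GET /logs` command is made up for illustration.

```python
import socket
import threading

def recv_line(sock: socket.socket) -> str:
    """Read bytes until a newline (or EOF) and return the decoded line."""
    buf = b""
    while not buf.endswith(b"\n"):
        chunk = sock.recv(1024)
        if not chunk:
            break
        buf += chunk
    return buf.decode().strip()

def control_plane(server: socket.socket, replies: list) -> None:
    # The control plane only listens; it never dials the node.
    conn, _ = server.accept()
    with conn:
        conn.sendall(b"GET /logs\n")      # command flows control plane -> node
        replies.append(recv_line(conn))   # reply comes back on the same connection

def node_agent(host: str, port: int) -> None:
    # The node opens an OUTBOUND connection, which works even though
    # the node itself has no public IP address.
    with socket.create_connection((host, port)) as sock:
        command = recv_line(sock)
        sock.sendall(f"ok: {command}\n".encode())

server = socket.create_server(("127.0.0.1", 0))   # port 0: pick any free port
port = server.getsockname()[1]
replies: list = []

cp_thread = threading.Thread(target=control_plane, args=(server, replies))
cp_thread.start()
node_agent("127.0.0.1", port)
cp_thread.join()
server.close()

print(replies)
```

The direction of the TCP connection (node to control plane) and the direction of the commands (control plane to node) are independent. That is what lets nodes without public IPs still be managed.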

AKS is changing very quickly, so you will find different versions of this tunneling. To find out which version your cluster is running, run the following command:

    kubectl get pods -n kube-system
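If you want to script this check, a small helper can scan the pod names in that output. This sketch assumes the pod names start with `tunnelfront-`, `aks-link-` or `konnectivity-agent-`. Exact names may differ between AKS versions. The sample text is abbreviated from the private-cluster listing shown later in this article.

```python
def detect_tunnel(kubectl_output: str) -> str:
    """Guess the AKS tunnel implementation from `kubectl get pods -n kube-system` output."""
    # Assumed pod-name prefixes; adjust if your AKS version names them differently.
    prefixes = {
        "konnectivity-agent-": "Konnectivity",
        "aks-link-": "aks-link",
        "tunnelfront-": "tunnelfront",
    }
    for line in kubectl_output.splitlines():
        fields = line.split()
        if not fields or fields[0] == "NAME":   # skip blank lines and the header
            continue
        for prefix, name in prefixes.items():
            if fields[0].startswith(prefix):
                return name
    return "unknown"

sample = """NAME                             READY   STATUS    RESTARTS   AGE
azure-cni-networkmonitor-6sssf   1/1     Running   0          3h32m
tunnelfront-65467fd9-jqn64       1/1     Running   0          3h36m
"""
print(detect_tunnel(sample))
```

Against a real cluster, you would pass in the live output instead, for example `subprocess.run(["kubectl", "get", "pods", "-n", "kube-system"], capture_output=True, text=True).stdout`.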

Tunnelfront

If you see pods in your cluster whose names start with tunnelfront-, then your cluster is running Tunnelfront. With Tunnelfront, the worker nodes initiate the connection to the control plane using the public endpoint. So while the nodes are running, there is an SSH connection, initiated by the Worker Nodes, open to the Control Plane.

In this way, the control plane can execute commands over the SSH tunnel.

AKS-Link

Tunnelfront is already being phased out. In more recent versions of AKS, you might instead see another type of pod, called aks-link.

If your cluster has AKS link, the main difference is that instead of using an SSH tunnel, a VPN tunnel (using OpenVPN) is established between the worker nodes and the control plane.

The concept is the same as for Tunnelfront: the VPN tunnel is a secure connection initiated by the worker node to the control plane. The main advantage over Tunnelfront is that OpenVPN can prevent man-in-the-middle attacks, because the worker node can validate the identity of the control plane using TLS certificates.
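On the agent side, validating the identity of the control plane comes down to two requirements. The TLS client demands a certificate that chains to a trusted CA, and it checks that the certificate matches the expected hostname. Here is a minimal sketch with Python’s `ssl` module. It is not OpenVPN’s actual configuration. The CA file is optional, and the hostname in the comment is hypothetical.

```python
import ssl
from typing import Optional

def make_agent_tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Client-side TLS context that refuses servers it cannot authenticate."""
    # SERVER_AUTH: we are the client and want to authenticate the server.
    # With ca_file=None, the system's default trusted CAs are loaded.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # These are already the defaults; set explicitly here for clarity.
    ctx.check_hostname = True              # certificate must match the hostname
    ctx.verify_mode = ssl.CERT_REQUIRED    # server must present a valid certificate
    return ctx

ctx = make_agent_tls_context()
# Hypothetical usage, after opening a TCP socket `sock` to the control plane:
# tls_sock = ctx.wrap_socket(sock, server_hostname="control-plane.example.com")
print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)
```

An attacker in the middle who lacks the private key behind a trusted certificate for that hostname cannot complete this handshake.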

Even though the Worker nodes are inside the VNET, and only have private IP addresses, they do have access to the public internet. In this way, they can reach the control plane via the public IP address.

AKS Konnectivity

Very recently, Azure introduced Konnectivity and is deprecating both tunnelfront and aks-link in its favor.

The role of Konnectivity is the same as that of Tunnelfront and aks-link. But instead of a VPN tunnel, Konnectivity uses a TCP-level proxy.

In the case of Konnectivity, the Konnectivity-agent runs inside the worker nodes and the Konnectivity-server runs inside the control plane.

If you are using a more recent version of AKS, you might see konnectivity-agent pods running on the Worker Nodes, in the kube-system namespace.

And this creates a TCP level proxy, similar to a VPN.
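A TCP-level proxy forwards raw bytes without understanding the protocol inside them. The core of such a relay can be sketched in a few lines of Python. This illustrates the concept only and is not the Konnectivity code; the upstream project tunnels connections between agent and server over gRPC. Here, `socket.socketpair()` stands in for the real network connections.

```python
import socket
import threading

def pipe(src: socket.socket, dst: socket.socket) -> None:
    """Copy raw bytes from src to dst until src reaches EOF, then half-close dst."""
    while True:
        data = src.recv(4096)
        if not data:
            break
        dst.sendall(data)
    dst.shutdown(socket.SHUT_WR)   # propagate EOF to the other side

def relay(a: socket.socket, b: socket.socket) -> list:
    """Forward bytes in both directions between a and b on background threads."""
    threads = [
        threading.Thread(target=pipe, args=(a, b)),
        threading.Thread(target=pipe, args=(b, a)),
    ]
    for t in threads:
        t.start()
    return threads

def read_all(sock: socket.socket) -> bytes:
    """Read until the peer signals EOF."""
    chunks = []
    while True:
        data = sock.recv(4096)
        if not data:
            return b"".join(chunks)
        chunks.append(data)

# client <-> [proxy] <-> server, with socketpairs standing in for network links
client, proxy_front = socket.socketpair()
proxy_back, server = socket.socketpair()
threads = relay(proxy_front, proxy_back)

client.sendall(b"GET /healthz\n")
client.shutdown(socket.SHUT_WR)
request = read_all(server)      # the server sees the client's bytes unchanged

server.sendall(b"ok\n")
server.shutdown(socket.SHUT_WR)
response = read_all(client)

for t in threads:
    t.join()
for s in (client, proxy_front, proxy_back, server):
    s.close()

print(request, response)
```

Because the relay never parses the bytes, the same mechanism works for any protocol the API server speaks to the nodes.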

Konnectivity is an improved replacement for aks-link and is based on the upstream Kubernetes Konnectivity service (the apiserver-network-proxy project).

But regardless of the implementation, the idea is the same: to establish a connection between a Control Plane managed by Azure and Worker Nodes running in a private VNET.

This has to be done via the public IP address of the Control Plane, and the connection is initiated by the worker nodes.

Should we worry about the fact that we are using a public IP address?

Does that not mean that communication between Control Plane and Worker Nodes can be intercepted, via a man-in-the-middle attack?

The Control Plane and the worker nodes should be in the same Azure region, so the packets exchanged between them should stay on Microsoft’s network rather than traverse the public internet. Furthermore, for a man-in-the-middle attack to work, the attacker needs to impersonate the Control Plane. That means the attacker would need access to the private keys behind the Control Plane’s TLS certificates. Unlikely, but possible.

What’s the purpose of the control plane and what can we do with the public endpoint?

The public endpoint is there to facilitate the administration of the cluster via the control plane using kubectl. Kubectl is also used to deploy Kubernetes applications.

In a typical CI/CD workflow, kubectl is used by GitLab CI, GitHub Actions, or Jenkins to deploy Kubernetes applications.

Because the Control Plane is exposed via public IP, it makes it very convenient to integrate it with Cloud CI/CD tooling, from anywhere on the internet.

AKS has authentication enabled by default. To connect to the cluster, you need the necessary k8s credentials.

Once you have your credentials you can then connect to the cluster using kubectl.

This is not very secure, because if someone is able to steal the k8s credentials, they’ll be able to connect to the cluster and do whatever they want with it. There could also be a vulnerability in Kubernetes that does not even require authentication to exploit.

Yes, you can also restrict access to the API server with IP allowlisting, which AKS calls API server authorized IP ranges. That helps to a certain extent.
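With authorized IP ranges, the public API endpoint only accepts requests from the CIDR ranges you list. You can set them, for example, with the `--api-server-authorized-ip-ranges` option of `az aks update`. The check itself is plain CIDR matching, sketched below. The ranges and client addresses are hypothetical documentation addresses. The real filtering is enforced by Azure, not by code you run.

```python
import ipaddress

# Hypothetical authorized ranges (documentation prefixes from RFC 5737)
AUTHORIZED_RANGES = [
    ipaddress.ip_network("198.51.100.0/24"),   # e.g. an office network
    ipaddress.ip_network("203.0.113.7/32"),    # e.g. a single CI runner
]

def is_authorized(client_ip: str) -> bool:
    """Return True if client_ip falls inside any authorized CIDR range."""
    ip = ipaddress.ip_address(client_ip)
    return any(ip in net for net in AUTHORIZED_RANGES)

for client in ["198.51.100.23", "203.0.113.7", "192.0.2.50"]:
    print(client, is_authorized(client))
```

This only narrows who can reach the endpoint. Anyone connecting from an authorized range with stolen credentials can still use them, which is why it only helps "to a certain extent".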

This type of k8s cluster will not suit every company that is concerned about security.

For instance, a bank would not want to expose a k8s cluster on the internet as this would not be compliant with its security policies.

This is why Microsoft had to add a private AKS cluster as an option for customers who need that level of security.

In a private AKS cluster, all the communication between the control plane and worker nodes happens inside a private VNET.

It’s important to realize that in a standard AKS cluster, all the communication between the control plane and the worker nodes goes through a tunnel. That tunnel is an SSH tunnel with tunnelfront, a VPN tunnel with aks-link, or a TCP-level proxy with Konnectivity.

The Worker Nodes are inside a VNET so the only way they can be contacted from the outside world is via the API server when using the kubectl command or if we create a load balancer.

Note that in AKS you cannot expose a pod to the internet with a NodePort service alone, because by default all the Worker Nodes have internal IP addresses only.

The drawback of the public API endpoint is that if someone were to steal your credentials, they would be able to control the k8s cluster.

What is a Private Azure Kubernetes Cluster?

The main difference between a standard AKS cluster and a private AKS cluster is that a private AKS cluster has a private endpoint instead of a public endpoint.

The private endpoint has a private IP address allocated within the range of the VNET.

Because you have a private endpoint, and its IP address is allocated within the same VNET as the worker nodes, you might expect the tunnels to be unnecessary. However, the tunnel pods are still there.

After setting up Azure Bastion in the same VNET as the private AKS cluster I was able to verify this:

    $ kubectl get pods -n kube-system
    NAME                             READY   STATUS    RESTARTS   AGE
    azure-cni-networkmonitor-6sssf   1/1     Running   0          3h32m
    azure-cni-networkmonitor-7qqa8   1/1     Running   0          3h33m
    azure-cni-networkmonitor-mad2t   1/1     Running   0          3h32m
    ...
    tunnelfront-65467fd9-jqn64       1/1     Running   0          3h36m

And there you have it: the tunnelfront pod is still running. It still creates the tunnel between the control plane and the worker nodes, but all communication goes over private IPs and the Private Link.

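Why is tunnelfront still needed? Good question. A likely reason is that a Private Link private endpoint only carries connections initiated from the VNET towards the control plane. When the API server itself needs to reach the nodes, for example for kubectl logs or kubectl exec, it still relies on a connection that the worker nodes opened.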

So the main difference to a Standard AKS Cluster is that the connection between the Control Plane and the Private Endpoint is done via a Private Link.

Because we have a private endpoint, it’s no longer possible to connect from the public internet to the private k8s API endpoint.

In order to access the control plane from the outside, you will need to use either a VPN, or if that is not possible you can access the API Server via a jump box, or more recently you can use Azure Bastion.

Although a private AKS cluster is definitely best practice from a security point of view, it makes the cluster much more complicated to administer.

For example, setting up CI/CD gets trickier, particularly if you are using a cloud service like GitHub or GitLab. A GitLab or GitHub shared runner sits outside the private network, so it has no access to the VNET where your AKS cluster’s private endpoint is located. Yes, there are ways around it, but then you will be weakening security anyway.
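The CI/CD problem comes down to routing. A private endpoint only has an address inside the VNET and any networks connected to it (through peering or a VPN). A runner can therefore only reach it from one of those networks. The sketch below models that with hypothetical addresses: the peered range, the endpoint IP and the runner IPs are all made up. Real reachability also depends on routes, network security groups, and DNS resolution of the private API server name.

```python
import ipaddress

# Hypothetical networks that can route to the private API endpoint
VNET = ipaddress.ip_network("10.0.0.0/20")               # cluster VNET
CONNECTED = [VNET, ipaddress.ip_network("10.1.0.0/16")]  # plus, e.g., a peered VNET

PRIVATE_ENDPOINT_IP = ipaddress.ip_address("10.0.0.10")  # hypothetical
assert PRIVATE_ENDPOINT_IP in VNET   # the endpoint lives inside the cluster VNET

def can_reach_private_api(runner_ip: str) -> bool:
    """A runner can reach the private endpoint only from a connected network."""
    ip = ipaddress.ip_address(runner_ip)
    return any(ip in net for net in CONNECTED)

runners = {
    "shared cloud runner": "20.60.1.2",   # public address on the internet
    "self-hosted runner in VNET": "10.0.1.25",
    "runner in peered VNET": "10.1.4.7",
}
for name, ip in runners.items():
    print(f"{name}: {can_reach_private_api(ip)}")
```

The workarounds mentioned above all amount to putting the runner, or a path to it, into one of those connected networks.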

And that’s about it. I hope you found this article useful. You should now know the difference between a Standard and a private AKS cluster, and be able to choose the one that suits your enterprise!

Comments

2 responses to “What is the difference between a Standard Azure AKS Cluster and a Private AKS Cluster?”

Hi, nice post. Is this statement in your post correct? “Because you have a private endpoint and the IP address itself is in the same subnet as the IP addresses for the worker nodes, there is no need to set up tunnels.”

My understanding is that a private cluster puts the control plane in a separate VNET from the one where the nodes reside, and we would need a connection between them by means of Private Link to get it to work. But the statement above says they are in the same subnet and can communicate without any tunnel.

Hi Rajesh,

The main purpose of the tunnel in AKS is to allow connecting from the outside to the inside. The outside is the control plane, and on the inside of the VNET are the worker nodes. The communication has to be done via a public IP, hence it is less secure.

With a private AKS cluster, this tunnel is not required for this type of connectivity, but it might still be used in the private cluster for other purposes.

The control plane for the private cluster is in a separate VNET. However, there is a private link between the VNET where the control plane is hosted and the VNET where the Worker nodes are hosted. This private link connects a private endpoint to the control plane itself.

Because of this, there is no need to set up a tunnel, as the private endpoint is in the same VNET as the AKS worker nodes. All communication can be done via private IPs.

I probably need to correct this article to make it more accurate, will do that soon.