GKE Autopilot is a fully managed Kubernetes cluster offering from Google, released to general availability (GA) last week, that brings together all the best features in scaling, security, and data operations, done the Google way. The key to this new product: you only pay for the pods that you run, and billing is based on the total vCPU, memory, and storage requested!

But hang on, was the “old” Google Kubernetes Engine not fully managed already?

GKE was indeed already a very hands-off Kubernetes cluster. With a standard GKE cluster, you don’t have to worry about the control plane, as it is managed by Google. You also don’t have to worry about setting up storage providers and networking. Load balancers are created on demand based on your ingresses, secure cluster authentication is switched on by default, and you can auto-scale the number of nodes using the GKE cluster autoscaler. Automatic upgrades are available through release channels, and so on.

So what is GKE Autopilot adding?

With GKE Autopilot, you no longer need to worry about the cluster nodes and how much CPU and memory each node requires. And since the nodes are no longer your concern, there’s no need to guess how many nodes you need to run your workloads, or how big those nodes should be.

With GKE Autopilot, all you need is a bit more discipline in specifying the workloads that will run in the Kubernetes cluster. This means it is very important for your Kubernetes resource YAML to state accurately how much vCPU and memory each container requires, no more, no less (more on that later).

With this in mind, instead of being charged per node, you are charged based on the total amount of CPU, memory, and ephemeral storage that is requested. The key word here is requested.

For example, see the following resource request for a WordPress pod:

    ...
    containers:
    - name: wordpress
      resources:
        requests:
          memory: "128Mi"
          cpu: "100m"
        limits:
          memory: "128Mi"
          cpu: "100m"

In the YAML definition above, we request one tenth of a CPU core (100m) and 128 MiB of memory for the WordPress container.
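To make those units concrete, here is a minimal Python sketch that converts Kubernetes-style quantity strings such as "100m" and "128Mi" into cores and bytes. The function names `parse_cpu` and `parse_memory` are my own, and the parser only covers a small subset of the suffixes Kubernetes accepts, so treat it as an illustration rather than a complete implementation.

```python
from fractions import Fraction

# Subset of the suffixes Kubernetes accepts for memory quantities.
# Binary suffixes are listed first so "128Mi" is not mistaken for "M".
_MEMORY_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30,
    "k": 10**3, "M": 10**6, "G": 10**9,
}


def parse_cpu(quantity: str) -> Fraction:
    """Return the number of CPU cores; "100m" means 100 millicores."""
    if quantity.endswith("m"):
        return Fraction(int(quantity[:-1]), 1000)
    return Fraction(quantity)


def parse_memory(quantity: str) -> int:
    """Return the number of bytes for a quantity such as "128Mi"."""
    for suffix, factor in _MEMORY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # plain number of bytes


cpu_cores = parse_cpu("100m")         # one tenth of a core
memory_bytes = parse_memory("128Mi")  # 128 * 1024 * 1024 bytes
print(cpu_cores, memory_bytes)
```

Note that "Mi" is a binary unit (mebibytes), which is slightly more than the same number of decimal megabytes.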

What you actually pay for, with GKE Autopilot, is the requested resources.

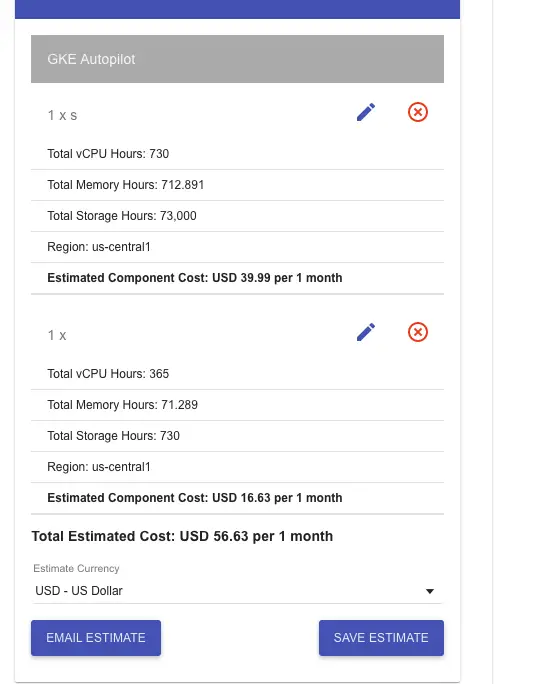

To get an idea of how much GKE Autopilot would cost, I ran a rough simulation of a workload that totals 730 vCPU hours and 712,891 memory hours for a single pod that requests a whole CPU core to itself. You end up paying at least 39.99 USD per month. Note that this value is just an estimate and varies between GCP regions.
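The shape of that calculation can be sketched in a few lines of Python. Everything specific here is an assumption: the function name `estimate_monthly_cost`, the request sizes, and above all the hourly rates, which are made-up placeholders and not Google's actual prices. The point is only that the bill scales with what you request, multiplied by the hours the pod runs.

```python
from decimal import Decimal


def estimate_monthly_cost(hours, requests, rates):
    """Sum requested resources times their hourly rate, times hours run.

    `requests` and `rates` are dicts keyed by resource name. Billing is
    driven by the requested amounts, not by what the pod actually uses.
    """
    hourly = sum(requests[name] * rates[name] for name in requests)
    return hours * hourly


# HYPOTHETICAL rates in USD per unit per hour, NOT real GKE pricing.
placeholder_rates = {
    "vcpu": Decimal("0.04"),
    "memory_gib": Decimal("0.004"),
    "storage_gib": Decimal("0.0001"),
}

# Hypothetical pod: one whole vCPU, 2 GiB memory, 10 GiB ephemeral storage.
pod_requests = {
    "vcpu": Decimal("1"),
    "memory_gib": Decimal("2"),
    "storage_gib": Decimal("10"),
}

# 730 hours is roughly one month of continuous running.
cost = estimate_monthly_cost(Decimal("730"), pod_requests, placeholder_rates)
print(f"Estimated cost with placeholder rates: {cost:.2f} USD")
```

For real numbers, plug in the rates for your region from the pricing page or use the pricing calculator.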

GKE Autopilot Management Fee

Now we need to talk about the management fee. On top of the resource charges, a GKE Autopilot cluster also incurs a management fee of $0.10 per hour per cluster.

If you only have one GKE Autopilot cluster, you receive a monthly credit of $74.40, which covers the whole management fee (744 hours in a 31-day month × $0.10 = $74.40). This means that you pay no management fee in that case.

If you need more than one GKE Autopilot cluster, or any other cluster in the same project or in other projects linked to the same billing account, then the management fee kicks in.
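As a quick sanity check on those numbers, here is a small Python sketch of the management fee arithmetic. It assumes a 31-day month (744 hours) and that the $74.40 credit is applied once per billing account, as described above. The function name is my own, and amounts are kept in cents to avoid floating-point rounding.

```python
FEE_CENTS_PER_CLUSTER_HOUR = 10  # $0.10/hour/cluster, from the text above
MONTHLY_CREDIT_CENTS = 7440      # $74.40 credit, from the text above


def net_management_fee_cents(clusters: int, hours_in_month: int = 744) -> int:
    """Management fee for one billing account after the credit, in cents.

    Assumes every cluster runs for the whole month and that the credit
    is applied once per billing account.
    """
    gross = clusters * hours_in_month * FEE_CENTS_PER_CLUSTER_HOUR
    return max(0, gross - MONTHLY_CREDIT_CENTS)


for n in (1, 2, 3):
    fee = net_management_fee_cents(n)
    print(f"{n} cluster(s): ${fee / 100:.2f} per month")
```

With a single cluster the credit cancels the fee entirely. Each additional cluster adds a full $74.40 in a 31-day month.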

Google thinks that large customers will definitely save money, because most of us are incredibly bad at estimating the correct number of nodes and resources needed to run a production workload. I think I agree.

GKE Autopilot Restrictions

GKE Autopilot does have plenty of restrictions, however. Let’s briefly discuss some of the ones that caught my attention. An exhaustive list is available in the Autopilot documentation linked under Resources.

You can’t modify kube-system namespace

First of all, you are no longer able to modify the kube-system namespace. You will therefore be prevented from adding new storage providers or making any networking-related changes.

No permissions to modify cluster nodes

Since you don’t have to worry about how many nodes you need, you no longer have permission to modify anything to do with the nodes. You can still see the nodes with kubectl, but you can’t touch them.

No node affinity for pods anymore

Since you can’t control how many nodes your cluster has, or when and how they run, it is only natural that you can no longer use node affinity for your pods. Instead, you can rely on pod affinity if you need two or more pods to run on the same node.

A limited number of Storage Classes available to use

You are also restricted in which storage classes you can use, as many of those available in Kubernetes would require privileged access to the nodes.

Resource limits need to be equal to requested resources

Perhaps this restriction exists to make it easier for Google to bill you: with GKE Autopilot, you lose the ability to set a resource limit higher than the requested CPU and memory. You need to make sure you give your pod enough resources from the start, no less, no more.

You might have noticed in the example YAML above that the requested resources (CPU and memory) are equal to the resource limits. This is not a mistake. If you try to set a higher limit, GKE Autopilot will just override your limit and make it equal to the requested value.
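To illustrate the effect of that override, here is a tiny Python sketch that takes a container's resources section as a plain dict and forces the CPU and memory limits to match the requests. This only mimics the behaviour described above. The function name `apply_autopilot_limits` is hypothetical and this is not how Autopilot is actually implemented.

```python
import copy


def apply_autopilot_limits(resources: dict) -> dict:
    """Return a copy of `resources` whose CPU and memory limits equal the requests."""
    result = copy.deepcopy(resources)  # leave the caller's dict untouched
    requests = result.get("requests", {})
    limits = result.setdefault("limits", {})
    for name in ("cpu", "memory"):
        if name in requests:
            limits[name] = requests[name]
    return result


submitted = {
    "requests": {"cpu": "100m", "memory": "128Mi"},
    "limits": {"cpu": "500m", "memory": "512Mi"},  # higher than requested
}

effective = apply_autopilot_limits(submitted)
print(effective["limits"])
```

The practical consequence is that a pod cannot burst above what it asked for, so the request has to be sized for the peak from the start.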

Security

We all love security, don’t we? GKE Autopilot comes with Workload Identity and Shielded GKE Nodes enabled, and there is no way to opt out.

Shielded GKE Nodes are great, not much to say against that. Who wants a potential attacker to pretend they are a node in your cluster?

However, being forced to use Workload Identity?

This is indeed more secure, but it also means that configuring access to GCP resources using IAM service accounts will be a lot more involved and complicated.

This is all I have to say for now. I am currently working on my own POC using GKE Autopilot and will share any more findings soon!

Do look at the links below for further information. And feel free to comment in case I have missed something important, or if I am completely wrong!

Resources:

https://cloud.google.com/blog/products/containers-kubernetes/introducing-gke-autopilot

https://cloud.google.com/kubernetes-engine/docs/concepts/autopilot-overview

Kubernetes Podcast from Google: Episode 139 – GKE Autopilot, with Yochay Kiriaty (kubernetespodcast.com)

Comments

2 responses to “What is Google Kubernetes Engine Autopilot and why it might(not) be for you”

How do you create a zonal AutoPilot cluster? https://cloud.google.com/kubernetes-engine/docs/concepts/types-of-clusters seems to indicate that you can only create regional clusters with AutoPilot.

Hi Bryan,

Thanks for the question. My mistake, I got confused with the pricing. The free credit applies to an autopilot cluster and it can only be regional. So you don’t have an option to select.

Which is great. Better deal than I initially thought.

Kind Regards,

Armindo